Version 1.0 of The State Decoded is now available on GitHub. This is the project's first official stable release, and it is now feature-complete.

Here are some of the highlights:

Pinned Laws

Laws can now be “pinned” to build a list of favorites to review later. The pinned laws list is available from the main navigation. To pin a law, either click the pin icon on the law's page or press the “p” key on your keyboard.
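As an illustration only, the pin behavior can be modeled as an ordered set of section identifiers that a click on the pin icon or a press of the “p” key toggles. The `PinnedLaws` class and `handle_keypress` function are hypothetical names, and keeping pins in memory like this is an assumption; it says nothing about where The State Decoded actually stores them.

```python
from typing import Dict, List


class PinnedLaws:
    """Hypothetical in-memory list of pinned laws, keyed by section identifier."""

    def __init__(self) -> None:
        # dicts keep insertion order, so pins are listed in the order they were added
        self._pins: Dict[str, None] = {}

    def toggle(self, identifier: str) -> bool:
        """Pin the law if it is unpinned, unpin it otherwise; return True if now pinned."""
        if identifier in self._pins:
            del self._pins[identifier]
            return False
        self._pins[identifier] = None
        return True

    def pinned(self) -> List[str]:
        return list(self._pins)


def handle_keypress(key: str, current_identifier: str, pins: PinnedLaws) -> None:
    """Toggle the law being viewed when the "p" shortcut is pressed."""
    if key == "p":
        pins.toggle(current_identifier)
```

Because pinning is a toggle, the same key or icon both adds and removes a law, and unpinning then re-pinning a law moves it to the end of the list.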

Better Support for Inconsistent Data

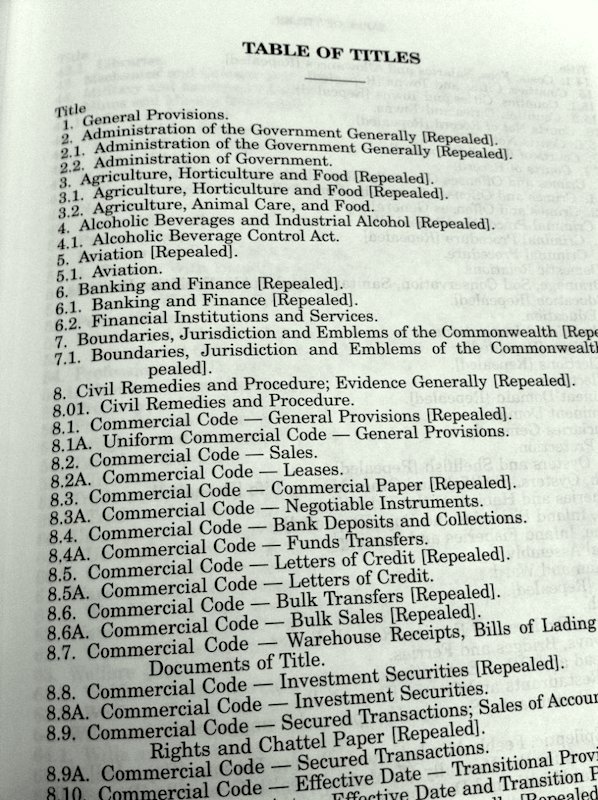

A major change is support for laws that share a section number. Some law codes contain multiple sections with identical identifiers (such as Virginia’s § 16.1-69.6:1), so The State Decoded now displays all laws that match a given identifier on a single page. Previously, only one of these laws could be viewed, which effectively hid the others.
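To make the difference concrete, here is a minimal sketch, assuming each law is a simple record with an identifier and a catch line. Indexing laws in a plain dictionary keyed by identifier keeps only the last law seen for a duplicated number; grouping them into lists keeps every match so a page can render them all. The `Law`, `index_laws`, and `laws_for_page` names are hypothetical and not taken from The State Decoded's code.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Law:
    identifier: str  # section number, e.g. "16.1-69.6:1"
    catch_line: str  # the section's title


def index_laws(laws: List[Law]) -> Dict[str, List[Law]]:
    """Group laws by identifier so every law sharing a section number is retained."""
    index: Dict[str, List[Law]] = defaultdict(list)
    for law in laws:
        index[law.identifier].append(law)
    return dict(index)


def laws_for_page(index: Dict[str, List[Law]], identifier: str) -> List[Law]:
    """Return all laws matching the identifier; an empty list means no match."""
    return index.get(identifier, [])
```

By contrast, `{law.identifier: law for law in laws}` silently overwrites earlier entries with the same key, which is exactly the "only one law is visible" behavior described above.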

Sections in which only some paragraphs have been repealed are also handled better. Previously, the numbering of the remaining paragraphs could lead to inconsistent labelling; now this text is shown as it was originally represented.
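One way to see why positional numbering goes wrong: if paragraph (B) of a section with paragraphs (A), (B), and (C) is repealed and labels are regenerated from position, the former (C) gets relabelled (B). The minimal sketch below assumes each paragraph carries its own label from the source text plus a hypothetical `repealed` flag, and contrasts the two approaches. It illustrates the idea only; the `[Repealed]` marker and all names are invented for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Paragraph:
    label: str             # label as printed in the source, e.g. "C"
    text: str
    repealed: bool = False


def render_positional(paragraphs: List[Paragraph]) -> List[str]:
    """Naive approach: drop repealed paragraphs, then relabel A, B, C... by position."""
    kept = [p for p in paragraphs if not p.repealed]
    return [f"({chr(ord('A') + i)}) {p.text}" for i, p in enumerate(kept)]


def render_original(paragraphs: List[Paragraph]) -> List[str]:
    """Keep every original label and show repealed paragraphs with a placeholder."""
    lines = []
    for p in paragraphs:
        body = "[Repealed]" if p.repealed else p.text
        lines.append(f"({p.label}) {body}")
    return lines
```

With paragraphs A, a repealed B, and C, `render_positional` yields `(A)` and `(B)`, while `render_original` yields `(A)`, `(B) [Repealed]`, and `(C)`, matching the printed code.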

Import & Export Improvements

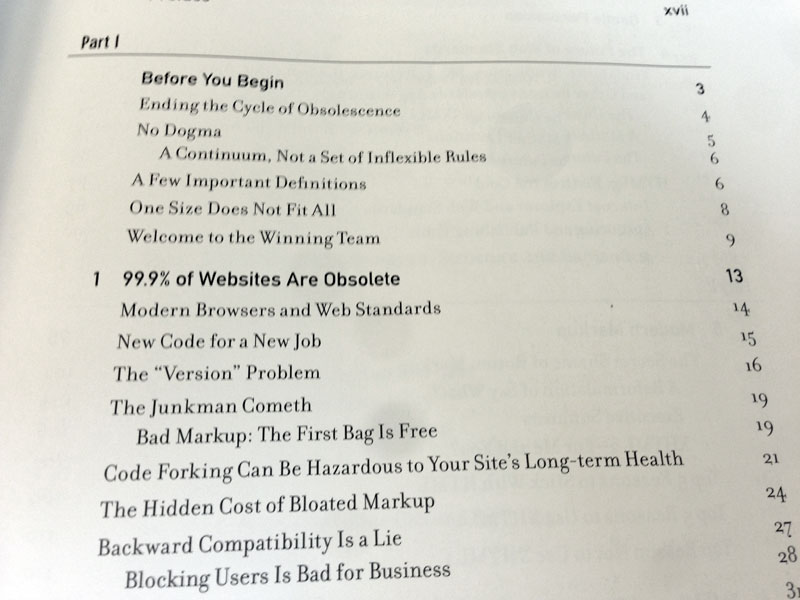

The import engine has been overhauled to use a different XML parser, resulting in better handling of mixed content and structured data. Structural units that lack an identifier or number are now supported, as are legal codes that do not have a uniform, consistent structure.
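For readers unfamiliar with the term, "mixed content" means text interleaved with child elements, such as a sentence containing an emphasized phrase. The sketch below uses Python's standard `xml.etree.ElementTree`, not the parser The State Decoded adopted, and the element and attribute names in `SAMPLE` are illustrative assumptions rather than the project's import format. It shows how to collect mixed text in document order and how to read structural units whose identifier may be missing.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

# Hypothetical input; element and attribute names are illustrative only.
SAMPLE = """<law>
  <structure>
    <unit label="title" identifier="1">Example Title</unit>
    <unit label="part">Unnumbered Part</unit>
  </structure>
  <text>Text with <em>an emphasized phrase</em> inside it.</text>
</law>"""


def flatten_text(elem: ET.Element) -> str:
    """Join all text inside elem in document order.

    With mixed content, elem.text holds only the text before the first child;
    the rest lives in child elements and their .tail, which itertext() walks.
    """
    return "".join(elem.itertext())


def read_units(structure: ET.Element) -> List[Tuple[str, Optional[str], str]]:
    """Return (label, identifier, name) per unit; identifier is None when absent."""
    return [
        (unit.get("label", ""), unit.get("identifier"), flatten_text(unit).strip())
        for unit in structure.findall("unit")
    ]


root = ET.fromstring(SAMPLE)
print(read_units(root.find("structure")))
# [('title', '1', 'Example Title'), ('part', None, 'Unnumbered Part')]
print(flatten_text(root.find("text")))
# Text with an emphasized phrase inside it.
```

Reading only `root.find("text").text` would return just `"Text with "`, which is the kind of truncation that careful mixed-content handling avoids.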

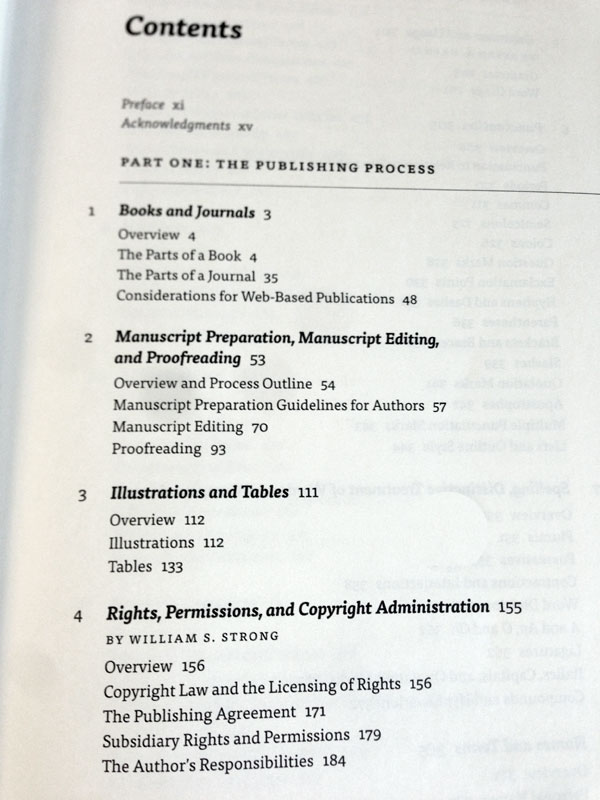

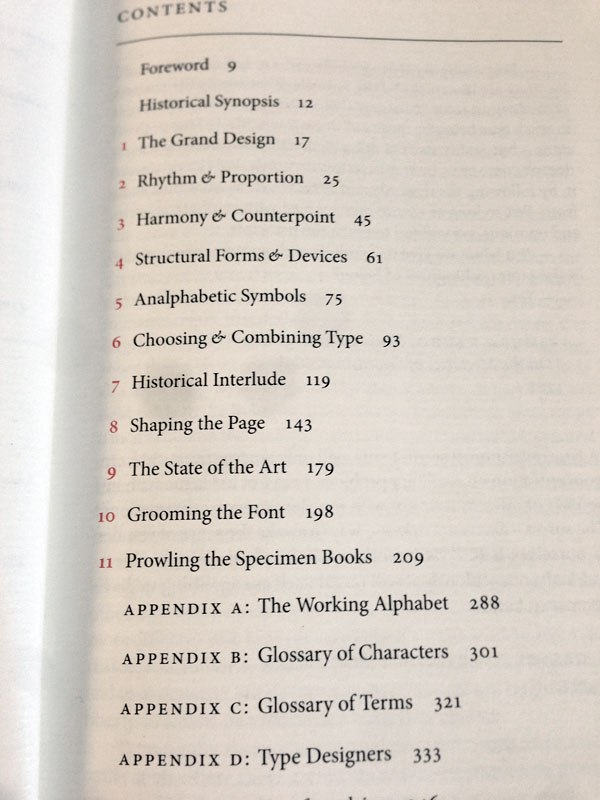

The export system has also been reworked to use a plugin-based architecture, making it easy to add new download formats. PDF, Word, and EPUB downloads are now included by default.
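A plugin-based exporter can be sketched as a registry that maps a format name to an export function, so adding a format means registering one more function without touching the download code. Everything below is hypothetical: the names are invented, and plain-text and JSON exporters stand in for the PDF, Word, and EPUB plugins, which would need third-party libraries.

```python
import json
from typing import Callable, Dict, List

Exporter = Callable[[dict], bytes]

# Hypothetical registry: format name -> function that renders one law as file bytes.
EXPORTERS: Dict[str, Exporter] = {}


def exporter(fmt: str) -> Callable[[Exporter], Exporter]:
    """Decorator that registers an export function under a format name."""
    def register(func: Exporter) -> Exporter:
        EXPORTERS[fmt] = func
        return func
    return register


@exporter("txt")
def export_text(law: dict) -> bytes:
    return f"§ {law['identifier']}. {law['catch_line']}\n\n{law['text']}\n".encode("utf-8")


@exporter("json")
def export_json(law: dict) -> bytes:
    return json.dumps(law, ensure_ascii=False).encode("utf-8")


def available_formats() -> List[str]:
    return sorted(EXPORTERS)


def export(law: dict, fmt: str) -> bytes:
    """Look up the plugin for fmt; the caller never needs to know which formats exist."""
    func = EXPORTERS.get(fmt)
    if func is None:
        raise ValueError(f"no exporter registered for {fmt!r}")
    return func(law)
```

Under this design, a new format is one more decorated function; the list of download links can be built from `available_formats()` instead of being hard-coded.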

Improved Command Line Tool

The command line tool can now be used to import data, manage editions, and test the environment without using the web interface. This makes it much easier to automate data updates.